Low Latency in Live Streaming

Video streaming has become part of everyday life. Whether we’re catching up on TV shows, attending virtual classes, or playing games, streaming video is now everywhere.

However, this ease of access does not come without its drawbacks. Latency is a common and significant issue that can interrupt the smooth reception of video content and reduce interactivity.

But what exactly is this latency? The problem can be compared to a phone conversation with a friend in a distant country, where they hear our words only several seconds after we speak them. In the long run, such a conversation becomes difficult, especially when we want to ask a question or change the topic.

In this blog post, we will delve into the topic of ultra-low latency in video streaming. We will cover what it entails, how it is achieved, and its significance.

What is latency in video streaming?

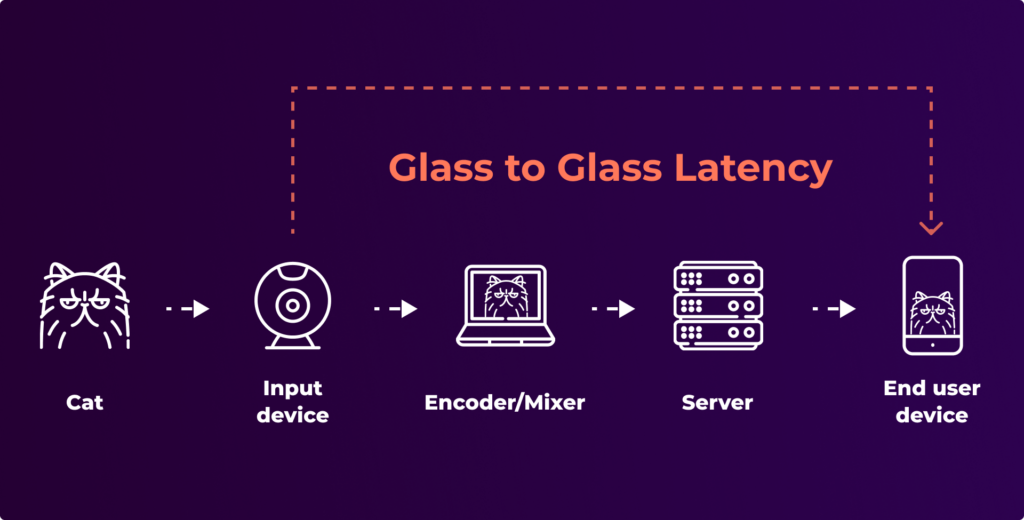

Latency in video streaming refers to the delay between the moment a scene is recorded and the moment it appears on your screen. In the industry, this is called “glass-to-glass” delay: the first glass is the camera lens, and the second is the viewer’s screen. Imagine it as the time it takes for light captured by the camera to be displayed on your monitor. In practice, high latency often goes hand in hand with annoying pauses or buffering, which are particularly noticeable during live events such as concerts.

This delay occurs because of how data is transmitted over the internet. Instead of sending a complete video file all at once, the stream is broken into small data packets, each carrying only a small piece of the video and audio data (a single video frame usually spans several packets). These packets travel via various routes from the broadcaster to the origin server, which then redistributes them to edge servers; from there, they pass through further routers and switches before finally reaching the viewer.
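In practice, a single compressed video frame is usually larger than one network packet. The short Python sketch below estimates how many packets an average frame needs. The 1400-byte payload size and the even spread of bitrate across frames are simplifying assumptions, and `packets_per_frame` is a hypothetical helper written for this example.

```python
import math

# Assumption: ~1400 bytes of payload per packet, which fits in a typical
# 1500-byte Ethernet MTU once IP/UDP/RTP (or IP/TCP) headers are added.
PAYLOAD_BYTES = 1400


def packets_per_frame(bitrate_bps: float, fps: float, payload_bytes: int = PAYLOAD_BYTES) -> int:
    """Average number of packets needed to carry one video frame.

    Simplification: the bitrate is spread evenly over all frames. Real encoders
    make keyframes much larger than the frames between them.
    """
    bytes_per_frame = bitrate_bps / fps / 8
    return math.ceil(bytes_per_frame / payload_bytes)


# A 4 Mbit/s stream at 30 fps: about 16.7 kB per frame, i.e. 12 packets.
print(packets_per_frame(4_000_000, 30))
```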

Minimizing latency is critical in the streaming industry. Audiences expect a seamless viewing experience, free from disruptions. Low latency streaming aims to minimize this delay, enhancing your viewing pleasure by reducing wait times and increasing engagement with the content.

What is ultra-low latency, and how does it differ from regular streaming?

There is no single, universally accepted definition of what constitutes ultra-low latency streaming, and the truth is that many companies use this term quite flexibly in their advertising materials. However, one can attempt to address the question empirically—what would such streaming be like? Since the main issue caused by these delays is the disruption of interaction between the broadcaster and the viewer, perhaps the most sensible definition would be that we talk about ultra-low latency streaming when the interaction is indistinguishable from standing face-to-face with that person.

So how low, exactly? In the case of streaming, we are talking about values below 0.5 seconds, with the ideal range being 150-200 milliseconds. Above that range, the delay becomes increasingly noticeable to participants.

To understand how delays occur in video streaming, we need to trace the entire path that the video takes—from the camera’s sensor through network and server infrastructure, to the device of a specific viewer. Below, we describe the key stages where delays occur.
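As a rough illustration of this stage-by-stage view, the glass-to-glass delay is simply the sum of the delays added at each stage. The sketch below adds up a hypothetical latency budget and compares it with the thresholds mentioned earlier. The stage names and millisecond values are invented placeholders, not measurements of any real setup.

```python
# Hypothetical per-stage delays in milliseconds. The numbers are illustrative
# placeholders, not measurements of any particular setup.
STAGES_MS = {
    "capture": 17,
    "encoding": 30,
    "upload to origin": 40,
    "origin to edge": 30,
    "edge to viewer": 40,
    "player buffer": 100,
    "decode and display": 20,
}


def glass_to_glass_ms(stages: dict) -> float:
    """Total delay is simply the sum of the delays of all stages."""
    return sum(stages.values())


def classify(total_ms: float) -> str:
    """Thresholds taken from the text: ideally 150-200 ms, below 500 ms at most."""
    if total_ms <= 200:
        return "ideal ultra-low latency"
    if total_ms < 500:
        return "ultra-low latency"
    return "above the ultra-low latency range"


total = glass_to_glass_ms(STAGES_MS)
largest = max(STAGES_MS, key=STAGES_MS.get)
print(total, classify(total), largest)  # 277 ultra-low latency player buffer
```

Summing the stages this way makes it easy to see which one dominates the budget and is therefore the first candidate for optimization.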

How is latency created, and what causes it?

- Video Encoding

The first thing that happens to data captured by a video camera (or webcam) is encoding. The raw video stream is huge, and transmitting it in its original form is practically impossible: we’re talking about several gigabits per second. The video must therefore be compressed by an encoder. An encoder (hardware or software) uses a specific video codec to create a much smaller version of the stream that can be transmitted over the internet. The higher the resolution and the desired quality, the longer this stage tends to take.
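To put “several gigabits per second” into numbers, the uncompressed bitrate is just width × height × bits per pixel × frames per second. The sketch below assumes 8-bit RGB (24 bits per pixel). The 6 Mbit/s compressed stream used for comparison is a hypothetical figure.

```python
def raw_bitrate_bps(width: int, height: int, fps: float, bits_per_pixel: int = 24) -> float:
    """Bitrate of uncompressed video: every pixel of every frame is sent as-is.

    bits_per_pixel=24 assumes 8-bit RGB. Chroma-subsampled formats such as
    4:2:2 (16 bits) or 4:2:0 (12 bits) reduce this, but not by orders of magnitude.
    """
    return width * height * bits_per_pixel * fps


full_hd = raw_bitrate_bps(1920, 1080, 60)
uhd = raw_bitrate_bps(3840, 2160, 60)
print(f"1080p60: {full_hd / 1e9:.2f} Gbit/s")  # 2.99 Gbit/s
print(f"2160p60: {uhd / 1e9:.2f} Gbit/s")      # 11.94 Gbit/s

# Compared with a hypothetical 6 Mbit/s compressed stream:
print(f"compression ratio: {full_hd / 6e6:.0f}:1")  # 498:1
```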

Software encoders, like x264, run on the CPU and can be comparatively slow. Alternatively, one can use hardware encoders built into graphics cards, such as NVENC from NVIDIA (available on most modern NVIDIA GPUs, including the GeForce and Quadro families) or AMF from AMD (Radeon family cards). Depending on the encoder and its configuration, encoding latency can differ by up to several seconds, so choosing the right parameters, such as profiles and bitrates, is crucial.
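Part of an encoder’s delay comes from frames it holds back before producing output, for example to look ahead for rate control or to build B-frames, which reference future frames. The sketch below uses a deliberately simplified model of this effect (it is not the exact formula used by x264 or any other encoder), with illustrative parameter values. For reference, x264’s `zerolatency` tune disables lookahead and B-frames for exactly this reason.

```python
def held_frames_delay_ms(fps: float, lookahead_frames: int, b_frames: int, frame_threads: int = 1) -> float:
    """Rough delay caused by frames the encoder buffers before emitting output.

    Simplified model (an assumption, not the exact formula of any encoder):
    the encoder waits for max(b_frames, lookahead_frames) future frames, and
    each additional frame-parallel thread holds one more frame in flight.
    The time spent actually encoding each frame comes on top of this.
    """
    held = max(b_frames, lookahead_frames) + (frame_threads - 1)
    return held * 1000.0 / fps


# Quality-oriented settings: 40 frames of lookahead, 3 B-frames, 4 frame threads.
print(round(held_frames_delay_ms(30, lookahead_frames=40, b_frames=3, frame_threads=4)))  # 1433
# Low-latency settings: no lookahead, no B-frames, a single frame thread.
print(held_frames_delay_ms(30, lookahead_frames=0, b_frames=0))  # 0.0
```

Even under this simplified model, each held frame costs one frame interval (about 33 ms at 30 fps), which is why lookahead-heavy settings quickly add more than a second.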

At this stage, it’s also worth mentioning thermal throttling. Most devices, such as personal computers, tablets, or smartphones, have no major problem decoding the image created by the encoder. This is because they have dedicated chips that drastically increase the efficiency of such operations while also reducing energy consumption, which is particularly important for mobile devices. Encoding, however, often does not receive such support, so it uses the full potential of the available processor, which can quickly reach high temperatures. To protect the chip or battery, such devices can lower the processor’s clock speed, which directly reduces the device’s performance and, for example, the number of frames per second it can produce.

- Data Transport to and from the Streaming Server

At this stage, our stream is already prepared, and we can send it to the streaming server. Regardless of whether we use on-premises software like Wowza Streaming Engine or Storm Streaming Server, or a cloud service like Dacast, on the other end of the cable is a computer that must receive our data, and the path to it sometimes leads through dozens of routers and switches belonging to various providers and network operators. A failed router or switch, or an optical fiber damaged by an excavator during earthworks, causes so-called “packet loss”, which increases the final delay of our broadcast: lost data often has to be sent again, which of course costs time.
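The cost of retransmissions can be estimated with a simple model. The sketch below assumes that losses are independent and that each lost transmission is recovered after one round-trip time (RTT); timeout-based recovery can take considerably longer. The loss rate, RTT and packets-per-frame values are hypothetical.

```python
def expected_extra_delay_ms(loss_rate: float, rtt_ms: float) -> float:
    """Average extra delay per packet if every lost transmission costs one RTT.

    With independent losses, the number of attempts per packet is geometric,
    with mean 1 / (1 - p); every attempt beyond the first adds one RTT.
    """
    if not 0 <= loss_rate < 1:
        raise ValueError("loss_rate must be in [0, 1)")
    return (1 / (1 - loss_rate) - 1) * rtt_ms


def frame_delayed_probability(loss_rate: float, packets_in_frame: int) -> float:
    """Chance that at least one packet of a frame is lost and must be resent."""
    return 1 - (1 - loss_rate) ** packets_in_frame


# Hypothetical link: 1% packet loss, 150 ms round-trip time, 12 packets per frame.
print(f"{expected_extra_delay_ms(0.01, 150):.2f} ms per packet on average")        # 1.52
print(f"{frame_delayed_probability(0.01, 12):.1%} of frames wait at least one RTT")  # 11.4%
```

The average looks harmless, but because a frame is only complete when all of its packets arrive, a noticeable share of frames is delayed by a full round trip. A player has to cover these spikes with its buffer, which is where the added latency shows up.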

The distance between our broadcasting device (encoder) and the streaming server also plays a significant role. Light in optical fiber travels at roughly two-thirds of its speed in a vacuum, real cable routes are longer than the straight-line distance, and each of the dozens of network devices along the way adds a little more delay. As a result, a round trip between, for example, Los Angeles and Berlin can easily take well over 100 ms. This is why the origin server (where the stream is delivered) should be relatively close to the broadcaster, and the edge servers (from which viewers receive the stream) relatively close to the viewers.

- Transcoding

Many cloud services, such as Wowza Streaming Cloud, Dacast, and Storm Streaming Cloud, offer real-time transcoding. If we broadcast in, say, 4K resolution, the service produces several versions of the stream, each with a lower resolution and bitrate. This allows a viewer using a phone in the middle of a city to receive the stream in, for example, 720p resolution, which suits the size of a smartphone’s display and requires significantly less bandwidth, which is crucial in urban environments and on LTE/5G connections. However, transcoding can add as much as 2-3 seconds of delay, because the image must be decoded and then encoded again, this time with different encoder settings.

- Delivery protocols & buffering

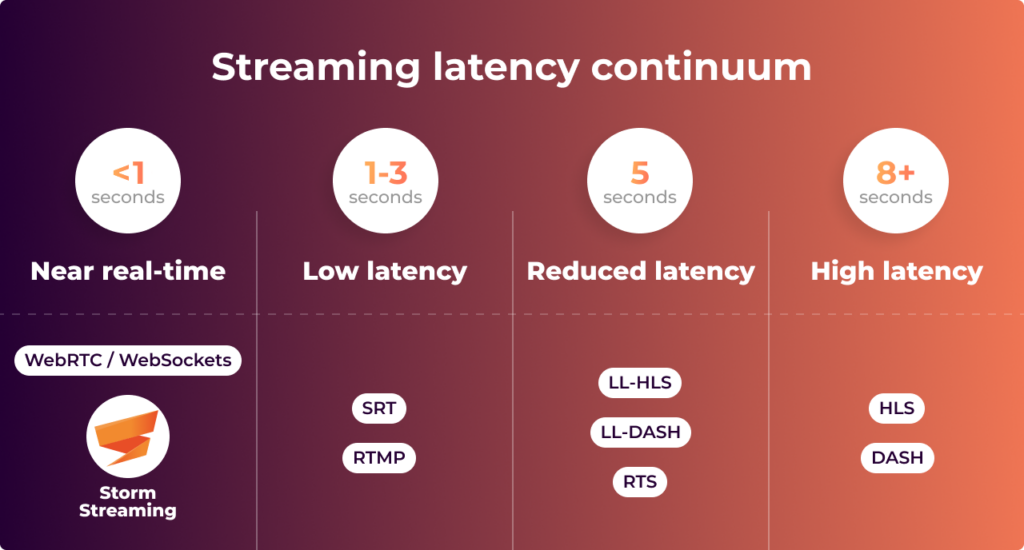

The final stage of the video signal’s journey takes place between the edge server and the viewer’s device. Here we must consider two issues: the choice of protocol used to deliver the data, and the configuration of an appropriate buffer. Most streaming services rely on Apple’s HLS technology, which works by splitting the video into short “chunks” that are then sent to the client. These chunks (also called segments) are typically 6-10 seconds long, and players usually start a few segments behind the live edge, so the segment duration adds to the total delay several times over. A similar situation applies to MPEG-DASH and CMAF-based delivery. On the other hand, protocols like WebRTC, or solutions based on WebSocket/Media Source Extensions, send data continuously, making it possible to achieve delays as low as 0.3-0.5 seconds.
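The effect of segment length can be sketched with a back-of-the-envelope calculation. RFC 8216, the HLS specification, says a client should not start playback with a segment that begins less than three target durations from the end of the playlist; the hypothetical helper below turns that into a rough best/worst-case range. It ignores encoding, transport and decoding delays unless they are passed in via the hypothetical `extra_s` parameter.

```python
def hls_live_latency_s(target_duration_s: float, extra_s: float = 0.0, segments_from_end: int = 3) -> tuple:
    """Rough (best, worst) live latency of a segment-based player.

    segments_from_end=3 follows RFC 8216, which says a client SHOULD NOT start
    with a segment that begins less than three target durations from the end
    of the playlist. On top of that, a segment is only listed once it is
    complete, so the newest listed media lags the live edge by 0..1 segment.
    extra_s stands for encoding, transport and decoding delays (assumed).
    """
    best = segments_from_end * target_duration_s + extra_s
    return best, best + target_duration_s


for seconds in (10, 6, 2):
    print(seconds, hls_live_latency_s(seconds))
# 10 (30.0, 40.0)
# 6 (18.0, 24.0)
# 2 (6.0, 8.0)
```

Even with very short 2-second segments, this rough model stays far above the 0.3-0.5 seconds achievable with continuous delivery.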

We also cannot forget about the buffer on the viewer’s device. Before it starts playing the video, a web browser or video player needs a reserve of the stream, called the “buffer”. When data is not delivered on time, or delivery is interrupted and the buffer runs empty, the video simply stops and waits for more data. Needless to say, this can be extremely frustrating for the viewer. A larger buffer protects against such interruptions, but every second of buffered video is also a second of additional delay.
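The trade-off between buffer size and interruptions can be illustrated with a small simulation. The sketch below models a player that waits for a given amount of buffered media before starting and stalls whenever the next chunk has not yet arrived. The chunk length and arrival times are hypothetical, and real players use more elaborate rebuffering logic.

```python
import math


def simulate_playback(arrivals_s, chunk_s, startup_buffer_s):
    """Simulate a player that buffers before starting and stalls when it runs dry.

    arrivals_s: sorted wall-clock arrival times of consecutive chunks.
    chunk_s: media duration of each chunk.
    startup_buffer_s: media the player waits for before it starts.
    Simplification: after a stall, playback resumes as soon as the next chunk
    arrives. Returns (playback_start_s, stall_count, total_stall_s).
    """
    needed = max(1, min(math.ceil(startup_buffer_s / chunk_s), len(arrivals_s)))
    clock = arrivals_s[needed - 1]  # wall-clock time at which the next chunk starts playing
    start = clock
    stalls, stall_time = 0, 0.0
    for arrival in arrivals_s:
        if arrival > clock:  # buffer ran dry: wait for this chunk
            stalls += 1
            stall_time += arrival - clock
            clock = arrival
        clock += chunk_s  # play the chunk
    return start, stalls, stall_time


# Hypothetical delivery: 0.5 s chunks, with a 1 s hiccup before the fourth one.
ARRIVALS = [0.5, 1.0, 1.5, 3.0, 3.5, 4.0]
print(simulate_playback(ARRIVALS, 0.5, 0.5))  # (0.5, 1, 1.0): starts early, stalls once
print(simulate_playback(ARRIVALS, 0.5, 1.5))  # (1.5, 0, 0.0): starts 1 s later, no stall
```

In this toy run, both players end up equally far behind the live stream after the hiccup. The larger buffer simply pays the extra second up front instead of as a visible stall.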

How to stream with ultra-low latency?

Here are some key factors to consider for broadcasting with ultra-low latency:

- Efficient Hardware – To broadcast with ultra-low latency, it is essential to have efficient hardware and an internet connection that can handle long working hours under heavy load.

- Appropriate Encoder and Codec – Choosing the right codec and its settings is one of the most crucial points on this list. Quality settings, bitrate, resolution, profiles, or tuning will result in the creation of either minimal or significant delays.

- Suitable Streaming Server/Cloud – Not all software offers the same performance, and not every cloud service will provide a sufficiently developed and stable network infrastructure for our needs.

- Stable Network – We cannot guarantee that our viewers will have a stable internet connection, but this does not mean that we as broadcasters should not ensure we have a solid internet link. Unless absolutely necessary, avoid using LTE/Wi-Fi – a wired connection is always more reliable (for example, you can connect a phone to a router using a special USB-C to Ethernet adapter).

- Choice of Protocol – Protocols such as WebRTC, or solutions based on WebSockets / Media Source Extensions, offer the lowest latencies. Avoid solutions based on HLS or MPEG-DASH/CMAF, which even in their low-latency variants (e.g., LL-HLS) still produce delays measured in seconds rather than milliseconds.
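As one concrete illustration of encoder and codec settings, the sketch below builds an ffmpeg command line that uses x264 with its `zerolatency` tune. It is only an example under assumptions: the input file, RTMP output URL, bitrate and keyframe interval are placeholders, RTMP is assumed as the ingest protocol, and `low_latency_ffmpeg_args` is a hypothetical helper. The right values depend on your content and streaming server.

```python
def low_latency_ffmpeg_args(input_path: str, output_url: str, fps: int = 30, bitrate_kbps: int = 3000) -> list:
    """Build an ffmpeg command line for a latency-oriented x264 encode.

    The input path, output URL, bitrate and keyframe interval are placeholders.
    The output is RTMP (FLV container), assumed here as the ingest protocol.
    """
    return [
        "ffmpeg",
        "-re", "-i", input_path,              # read a file at its native rate, like a live source
        "-c:v", "libx264",
        "-preset", "veryfast",                # spend less CPU time per frame
        "-tune", "zerolatency",               # no lookahead, no B-frames, sliced threads
        "-profile:v", "baseline",             # simplest H.264 profile; has no B-frames
        "-pix_fmt", "yuv420p",                # the baseline profile requires 4:2:0 chroma
        "-g", str(fps * 2),                   # a keyframe every 2 seconds
        "-b:v", f"{bitrate_kbps}k",
        "-maxrate", f"{bitrate_kbps}k",
        "-bufsize", f"{bitrate_kbps // 2}k",  # ~0.5 s rate-control buffer
        "-c:a", "aac", "-b:a", "128k",
        "-f", "flv", output_url,
    ]


args = low_latency_ffmpeg_args("input.mp4", "rtmp://example.com/live/stream-key")
print(" ".join(args))
# To actually start streaming (requires ffmpeg built with libx264):
# import subprocess; subprocess.run(args, check=True)
```

Returning the arguments as a list rather than a single string avoids shell-quoting problems when the command is later passed to `subprocess.run`.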

Benefits of ultra-low latency

- Interactivity – Low latency allows viewers to react to what is happening on the screen, and the presenter to interact with them. Examples include auctions or sales during video broadcasts, but also various types of voting, questions to the audience, or comments, e.g., read by the presenter. The greater the interactivity, the greater the engagement of the viewers.

- Easier conversations – If we are dealing with a situation where two or more people are having a discussion or conversation, ultra-low latency streaming makes the entire process much smoother and more natural.

- Faster reaction times – Ultra-low latency streaming has significant applications in cloud gaming, where the game runs on a machine located hundreds of kilometers away from the player, yet the experience remains smooth and responsive. The same applies to streaming footage from a drone while controlling it remotely (e.g., in rescue operations).

- Synchronized playback – Lower delays mean that viewers watching a given event see nearly the same moment regardless of their location. This is hugely important for sports competitions, where a play that ends in a goal can unfold within just a few seconds.

When is ultra-low latency not worth it?

While ultra-low latency has many advantages, it is not always worth pursuing at any cost. First, some applications simply do not need it. For instance, if we are giving a lecture with little audience participation, lower delays add no value. Another issue is cost. The previously mentioned technologies, HLS, MPEG-DASH, and CMAF, despite their significant delays, have an undeniable advantage: they are much easier to scale. This follows from how these protocols work: the stream is simply divided into many small video files, which are relatively easy to replicate through CDN systems.

If our goal is the best possible image quality, we might also choose to sacrifice latency. Giving the encoder more time to work on each frame results in a sharper, clearer image. We can also use a different codec, such as H.265, which requires more computing power to encode but produces a stream roughly half the size of an H.264 stream of the same quality (this can be hugely significant in areas with poor internet connections).
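The bandwidth argument can be illustrated with simple arithmetic. The sketch below takes the estimate that H.265 needs about half the bitrate of H.264 at face value and checks whether a stream fits a viewer’s connection. The 25% headroom, the 5 Mbit/s link and the 6 Mbit/s H.264 bitrate are assumptions for the example, and both helpers are hypothetical.

```python
# About half, per the text; real savings depend on content, encoder and settings.
H265_BITRATE_FACTOR = 0.5


def bitrate_for_codec_kbps(h264_kbps: float, codec: str) -> float:
    """Bitrate needed for roughly the same quality as a given H.264 stream."""
    factors = {"h264": 1.0, "h265": H265_BITRATE_FACTOR}
    return h264_kbps * factors[codec]


def fits_connection(bitrate_kbps: float, link_kbps: float, headroom: float = 0.75) -> bool:
    """Assumption: keep ~25% of the link free for overhead and fluctuations."""
    return bitrate_kbps <= link_kbps * headroom


# Hypothetical viewer on a 5 Mbit/s link, stream encoded at 6 Mbit/s in H.264:
for codec in ("h264", "h265"):
    kbps = bitrate_for_codec_kbps(6000, codec)
    print(codec, kbps, fits_connection(kbps, 5000))
# h264 6000.0 False
# h265 3000.0 True
```

The catch, as noted above, is that the extra encoding work for H.265 can itself add delay, so this trade favors quality and reach over latency.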

Summary

In the rapidly evolving world of video streaming, ultra-low latency stands out as a pivotal technology that bridges the gap between virtual interactions and real-time engagement. As we navigate through diverse applications from live auctions to high-stakes gaming, ultra-low latency not only enhances the viewer’s experience by minimizing delays but also elevates the level of interactivity, making digital encounters feel almost as immediate as face-to-face conversations. However, the pursuit of ultra-low latency is not without its trade-offs. It requires careful consideration of factors such as cost, the necessity of application, and potential sacrifices in video quality. Understanding when to leverage ultra-low latency—and when it’s overkill—is crucial for optimizing both performance and resources. As streaming technologies continue to advance, balancing these aspects will be key to delivering seamless, engaging, and responsive video content to a global audience.